Software Scaleup Gains Performance Insights Into Distributed Software System

Using streaming analytics, NextPax gained continuous insight into the performance of the complex pipeline behind their core product, along with the ability to trace jobs through it.

The Goal

Improve performance and gain insight into a distributed software system. The insights needed were performance characteristics such as the runtime of each job and the number of times it ran.

The Challenge

Build a solution that generates and analyses data to track the performance of a distributed software system producing billions of data points per day.

The Approach

Create a dashboard on top of an event-based stream processing pipeline with Apache Kafka that performs tracing and calculates performance statistics in real time.

- Sector: Travel & Hospitality

- Use Case: Application Monitoring

About NextPax

NextPax is an international channel manager in the travel industry with a distribution network to channels such as Booking.com, Airbnb and HomeAway. As a channel manager, they help all kinds of homeowners by connecting property managers and property management systems to various channels, allowing properties to be booked on different channels. The channel manager software that NextPax has developed is vital to this mission.

Millions of updates per day

As NextPax has grown into one of the biggest channel managers in Europe, their software processes data for millions of properties per day. As development continued, the software system grew substantially, and with it its complexity. One of the paramount features of NextPax's software is providing timely updates to all connected booking channels. To handle this high load, the system uses a highly scalable distributed architecture with many moving components. Additionally, to grow further into other markets and provide a seamless experience to the end user, system performance has to be preserved and improved wherever possible.

Because of this, we had the following two challenges:

- Gain more insight into the pipelines of the software system, such that NextPax can monitor the performance and inspect and investigate any issues that come up.

- Identify the most crucial components of the system and improve their performance.

Because of the distributed nature of the software system we were dealing with, a simple logging solution would not suffice.

A logging solution would allow us to monitor the performance of individual jobs and servers, but not the system as a whole.

The system is composed of a pool of worker nodes that process jobs from queues, and these jobs are linked together.

The main challenge in gaining insight is tracing a series of jobs (running on any number of different Linux processes and servers) back to one client-facing process.

Similarly, improving the performance of a distributed system with many components is challenging, as the question will always be where to start, like finding a needle in a haystack.
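The tracing problem described above can be illustrated with a minimal sketch. It assumes every job emits an event carrying its own ID and the ID of the job that dispatched it; the `JobEvent` fields, the job type names, and the helper names are hypothetical and not taken from the NextPax codebase.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


@dataclass(frozen=True)
class JobEvent:
    """Hypothetical event emitted by a worker when it runs a job."""
    job_id: str
    parent_id: Optional[str]  # None for the client-facing root job
    job_type: str
    host: str


def find_root(job_id: str, events_by_id: Dict[str, JobEvent]) -> str:
    """Follow parent links upwards until the originating job is reached."""
    seen = set()
    current = job_id
    while True:
        if current in seen:
            raise ValueError(f"cycle detected at job {current}")
        seen.add(current)
        parent = events_by_id[current].parent_id
        # Stop at a true root, or at the last known job if the parent's
        # event has not been seen (assumption: treat it as the root).
        if parent is None or parent not in events_by_id:
            return current
        current = parent


def group_by_root(events: Iterable[JobEvent]) -> Dict[str, List[JobEvent]]:
    """Group jobs from any host into one trace per originating job."""
    events = list(events)
    by_id = {e.job_id: e for e in events}
    traces: Dict[str, List[JobEvent]] = {}
    for event in events:
        traces.setdefault(find_root(event.job_id, by_id), []).append(event)
    return traces
```

Because each event only references its direct parent, workers need no knowledge of the full chain, which fits the idea of jobs running on any number of processes and servers.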

Event Streaming & Distributed Job Tracing

As we were investigating a potential solution to the proposed challenges, we quickly isolated a few overall requirements for the project:

- Low overhead: since it will affect all jobs

- Application-level transparency: to keep the changes required to the existing software to a minimum

- Scalability: as this tool should collect data from all nodes in the cluster

From these requirements and the goals listed, we worked on the following use cases:

- Track the impact of each type of job on overall performance, to structurally improve system performance.

- Trace jobs back to the original job that dispatched them to validate timely updates.
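As a rough illustration of these two use cases, the sketch below ranks job types by their share of total runtime and measures the end-to-end duration of one trace. The input shapes and the job type names in the usage note are assumptions made for the example, not the actual NextPax data model.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def impact_by_job_type(runs: Iterable[Tuple[str, float]]) -> List[Dict]:
    """Rank job types by total runtime.

    `runs` is an iterable of (job_type, runtime_seconds) pairs.
    """
    stats = defaultdict(lambda: {"runs": 0, "total_seconds": 0.0})
    for job_type, runtime in runs:
        stats[job_type]["runs"] += 1
        stats[job_type]["total_seconds"] += runtime

    grand_total = sum(s["total_seconds"] for s in stats.values())
    report = []
    for job_type, s in stats.items():
        share = s["total_seconds"] / grand_total if grand_total else 0.0
        report.append({
            "job_type": job_type,
            "runs": s["runs"],
            "total_seconds": s["total_seconds"],
            "share": share,  # fraction of all measured runtime
        })
    # The heaviest job types come first: these are the candidates to optimise.
    report.sort(key=lambda row: row["total_seconds"], reverse=True)
    return report


def end_to_end_seconds(spans: Iterable[Tuple[float, float]]) -> float:
    """Time from the first job starting to the last job finishing in a trace.

    `spans` holds one (started_at, finished_at) pair per job in the trace.
    """
    spans = list(spans)
    return max(finish for _, finish in spans) - min(start for start, _ in spans)
```

For example, `impact_by_job_type([("sync_prices", 2.0), ("sync_prices", 4.0), ("push_availability", 2.0)])` lists `sync_prices` first with a share of 0.75, and `end_to_end_seconds` on a trace's spans gives a number that can be compared against whatever timeliness target applies.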

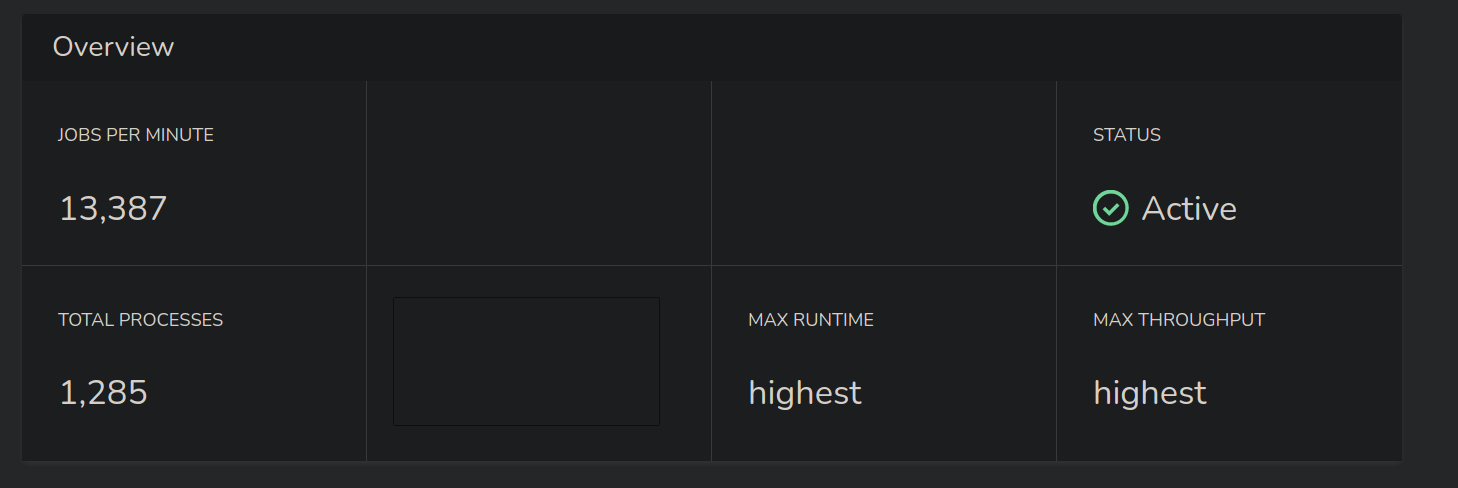

As a result, we built a highly scalable streaming analytics pipeline based on Apache Kafka, which handles various events that we introduced into the channel manager software. This enabled us to keep up with the thousands of data points coming in every second while computing the analytics in real time. The metrics were subsequently exposed as an API to the original system, where we built a flexible dashboard with real-time insights.
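To give an idea of what computing analytics over a stream can look like, here is a minimal sketch of a tumbling-window aggregation. It is not the actual pipeline: an in-memory iterable stands in for a Kafka topic, events are assumed to arrive ordered by `finished_at`, and the event keys and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator


def _summarise(window: int, window_seconds: int, stats: Dict) -> Dict:
    """Turn the running counters of one window into a result record."""
    return {
        "window_start": window * window_seconds,
        "per_job_type": {
            job_type: {"runs": runs, "mean_runtime": total / runs}
            for job_type, (runs, total) in stats.items()
        },
    }


def tumbling_window_stats(
    events: Iterable[Dict], window_seconds: int = 60
) -> Iterator[Dict]:
    """Yield per-job-type run counts and mean runtimes for each time window.

    Each event is a dict with the (assumed) keys `finished_at`, `job_type`
    and `runtime`. Only the counters of the current window are kept in
    memory, so the input can be an unbounded stream.
    """
    current_window = None
    stats = defaultdict(lambda: [0, 0.0])  # job_type -> [runs, total runtime]
    for event in events:
        window = int(event["finished_at"] // window_seconds)
        if current_window is not None and window != current_window:
            # The window has closed: emit its result and start a new one.
            yield _summarise(current_window, window_seconds, stats)
            stats = defaultdict(lambda: [0, 0.0])
        current_window = window
        entry = stats[event["job_type"]]
        entry[0] += 1
        entry[1] += event["runtime"]
    if current_window is not None:
        # Flush the last, possibly incomplete, window.
        yield _summarise(current_window, window_seconds, stats)
```

A production setup would typically also have to deal with late or out-of-order events and with partitioning across consumers, which this sketch leaves out.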

Results

- Substantial insight into their software system, allowing them to further monitor and optimise their software, which has an important impact on the quality of the data on the various channels.

- High-impact code optimisations in the most crucial algorithms of the system, speeding up the processing of jobs by 3 times.

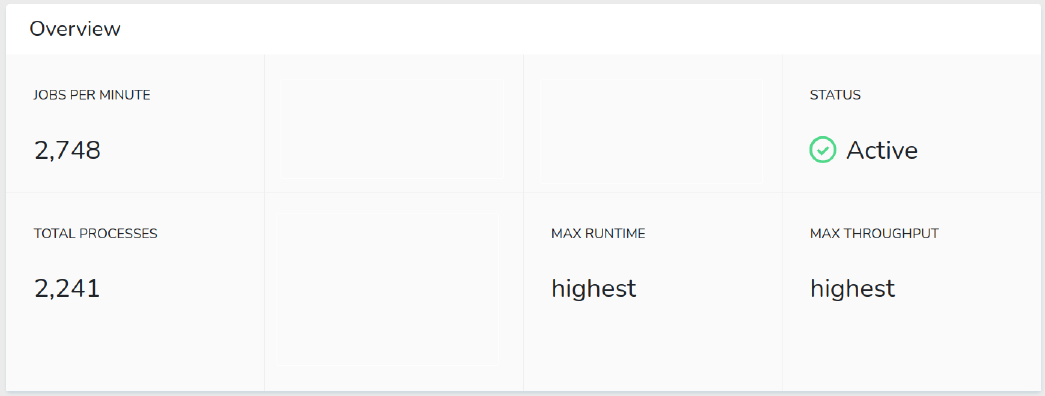

The horizon dashboard shown here gives an overview of the jobs being processed by the platform. The first picture shows a snapshot of the situation before we started, and the second (the dark screenshot) shows the situation after our critical changes, which improved the number of jobs processed per minute by a factor of roughly 3 to 6.

Final presentation

At the final presentation, we shared the results of the project along with recommendations for further improving the performance of the platform.

“I want to thank the BiteStreams team, and especially Donny Peeters & Maximilian Filtenborg, for delivering our tailored ‘performance analytics solution’ that will add a lot of value to our NextPax customers!”