Donny Peeters

Donny Peeters

Save yourself from inconsistency and complex migration plans by watching out for these data modeling issues.

What do databases and garages have in common?

The bigger they are, the more we collect.

It’s a sad but reoccurring theme. You have double the memory on your new laptop? Chrome happily takes half. Your pictures max out your 64 GB phone, so you buy a 256 GB model? Well, those new 4k images cost 4 times the storage. Bumping PostgreSQL from version 9.6 to 15 improves the max transactions per second with almost 50%)? Great, we’ll sign up 100% more users next year.

It might be tempting to think databases escaped this data creep. As this talk jokingly states:

In the 80’s working on performance looked like this: sipping mojitos at the beach waiting for Moore’s law to catch up.

But that would be naive. At large scale, every structural performance gain will stay relevant. In other words: if you find a way to improve performance without technical debt, it will outlive new technology.

Every structural performance gain will stay relevant.

But what is there to control? As companies learned that their bulky report queries require some care, they moved data to databases tuned for analytical workloads. Suddenly, they could tune the schema’s for analytical queries as well. You control the data model used to meet the new requirements for this system.

Enter dimensional modeling. This data modeling technique that favors easy and performant analytical queries over normalization. After all, the primary goal of normalizing your data model is preserving (relational) integrity and reducing redundancy. But your data warehouse has no uncontrolled writes; source systems handle those. Hence, source systems (should) preserve integrity. Pipelines can just be restarted.

Still, dimensional modeling goes against the instincts of the seasoned modeler, especially at companies starting to build a data warehouse. So, in this second part of this two-part blog series on data modeling, I will discuss 7 dimensional modeling pitfalls to avoid in your own data warehouse.

But then we have duplicate data in dimension..

We might introduce inconsistency when we add or change entries in this table.

With dimensional modeling, you’re trying to serve data scientists creating insights or management trying to keep a pulse on the business. This changes performance requirements. As described above, the constraints change in your data warehouse: this free ticket to read-performance optimizations lies at the core of dimensional modeling.

As a result, 3NF is no longer needed. Prepare your data using fact and dimension tables to serve a powerful BI dashboard or easily join into CSV exports. Recognize when the warehouse is given responsibilities that belong in the operational system.

Fact and dimension tables are at the core of dimensional modeling. However, identifying the facts and dimensions is not always trivial, an obvious pitfall being not knowing where to draw the line between them. Are orders my facts? Or order items? Does the order creation time belong in a dimension table like the order creation date?

Making the wrong choice means wrestling with too complex dimension tables, having to join the fact table with itself, and calculating important business metrics using subqueries for the dimension table. As a rule of thumb, fact tables mainly consist of numeric measures in the form of foreign keys and (semi/non) additive fields.

Yeah so most of the dimension records still use the old category names, except the new ones

One eye-opener for me was learning about slowly changing dimensions. Often, the source tables in your dimension tables are subject to in-place updates. It is notoriously difficult to consistently keep track of these changes while keeping the data tidy and actionable. In The Data Warehouse Toolkit, Kimball describes several ways of dealing with these in the dimension tables themselves:

Type 1: Overwrite

Type 2: Add a New Dimension Record

Type 3: Add a New Field

Each type has its own challenges.

Type 2 requires surrogate keys for instance, since you have multiple versions of an entity, each having the same primary key.

Type 3 can create very wide dimension tables if not done carefully,

but you can also decide to limit yourself to saving only the 2 most recent versions.

An example would be keeping track of a user’s address and previous_address.

This data is usually lost on an operational level, so it truly adds a new perspective on your company data if this aggregates in the data warehouse.

When I add up all clients the number does not match the total!

This one is a classic.

In a GROUP BY category statement, rows where category is null are ignored.

Consequently, histogram-like views or aggregated tables show the right numbers per category,

but they don’t add up to the right total because of the missing rows.

A simple fix for this is to replace null by placeholder values such as UNKNOWN or MISSING,

which will ensure the totals add up again.

This also gives a more realistic view of the data in cases where these could actually be missing,

which might have the benefit to push stakeholders to improve data quality.

A general rule is not to allow null’s in your dimension attributes to avoid this in the first place.

Strange, whenever I filter on this field I get inconsistent results…

The foreign keys in your fact table allow users to slice and dice information from the fact tables using the dimension tables.

But perhaps some facts do not point to a reasonable dimension row.

Making the foreign keys nullable increases the risk of inconsistency whenever you decide to group by one of them.

Or worse, you neglect all the facts with null references when you join on the dimension table -

an easy mistake since the default join strategy in most relation databases such as

PostgreSQL

and

MySQL is an INNER JOIN.

One solution is to create a default row in the dimension table to which you point these nulls instead.

The alternative is to make sure you’re always doing a LEFT JOIN,

but then your BI solution will dictate the technical difficulty.

We cannot implement Type 2 slowly changing dimensions as this will mess up the primary keys..

Unfortunately, we should be careful updating primary keys because of our data warehouse

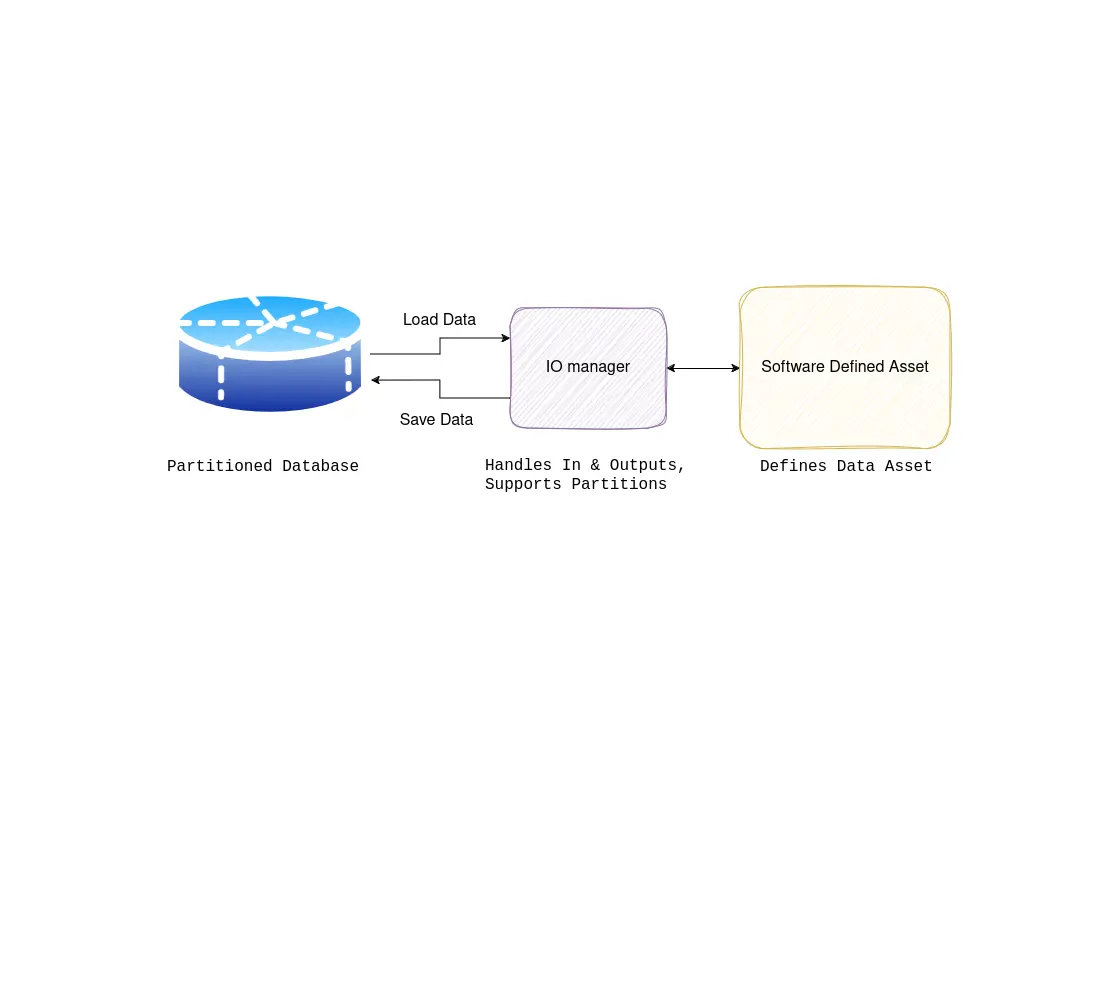

As redundant as it looks in the first iteration of your data warehouse, it is a good idea to invest the time into creating surrogate keys (SKs) to use as primary keys in your data warehouse. As the quote and tip 3 explain, one use-case you cannot implement without them is type 2 slowly changing dimensions. But other instances where this proves to be useful is when the source systems change their primary/natural keys. Without SKs, this will not only impact the table that changed, but also all tables referencing this table. That is to say: you will probably need to migrate one or multiple fact tables in the process.

In short, SKs provide looser coupling between operational source systems and the data warehouse. And loose coupling often shows its power in the long run.

Who’s going to focus on figuring out how week numbers work?

Does anyone have a list of national holidays laying around?

In most data warehouses, you’ll find a date dimension table. Time range filters are used regularly, as business users are always interested in trends, comparing this month’s results with last month’s or looking at the year-to-date (YTD) statistics Time is also one of the primary axes in most charts, meaning a time range is often applied even the first time a user sees some data.

Since the date is probably the only dimension table all businesses share, many sources provide a proper date dimension table out of the box. For instance, dbt has a package dedicated to such a dimension table. Multiple resources showcase how these tables can be generated. Leverage these resources and tools and don’t reinvent the wheel!

When I told someone about my idea for this blog, he said: “Shouldn’t we be putting everything in One Big Table nowadays?” And looking at those storage prices, he has a point. But how do you create a proper OBT? Most of these points still hold, as they focus on getting proper query results, reduce coupling to the source systems, and provide guidelines for use-cases that apply to both approaches. Perhaps the best way to create an OBT is first to create a star schema and join in all dimension tables. And when you do, know that in spite of all the pitfalls you will avoid, just like your garage, you will probably have to clean up again in 3 months.

Donny is a mathematician who started freelancing as a software/data engineer before co-founding BiteStreams. He can quickly spot design quirks and adapt systems to business needs. In his free-time he likes to work out, read and grab a drink with friends.

About usEnjoyed reading this post? Check out our other articles.

Donny Peeters

Donny Peeters

Maximilian Filtenborg

Maximilian Filtenborg

Maximilian Filtenborg

Maximilian Filtenborg